Setting up Gluetun for Ollama

It all started with wanting a pod to use a Wireguard connection so that it could reach something on the Wireguard VPN network. That led me down a rabbit hole of research to figure out how to get Gluetun, Wireguard, and Talos to all play nicely together. It took me way longer than I would’ve liked or expected, so I wanted to document it here in case someone else could benefit from it.

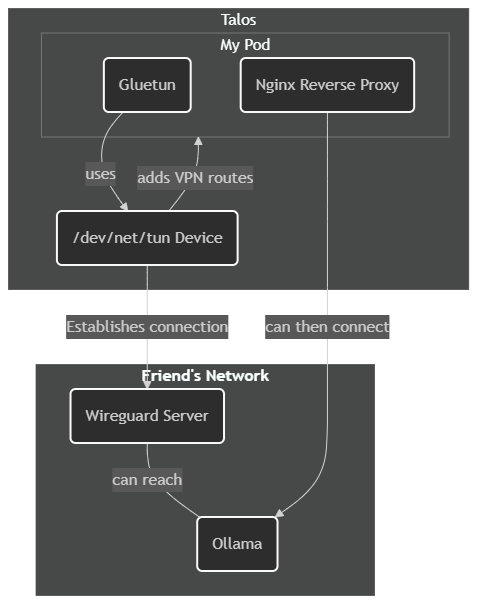

I wanted to (ab)use my friend’s Ollama instance since they have a much nicer GPU than I do, but I wanted to be able to utilize their Ollama from a local address. So my plan was the following:

- Use an Nginx reverse proxy to accept requests and forward them to his Ollama IP address

- Create a networking device on my Talos nodes that Gluetun could then use to establish the VPN connection

- Use Gluetun (and the networking device) to establish the Wireguard connection

- Since containers in the same pod share networking and Gluetun adds the routes, the Nginx container can use those routes too (and therefore reach the Ollama endpoint)

I wanted to be able to use Gluetun with Wireguard, and I struggled to understand how it should work. These are my notes and how I got it to work.

What made this experience extra fun was that my friend uses the network 192.168.1.1/16 internally, but also uses a BGP subnet of 10.10.0.0/16. So even though I already had a 192.168.3.0/24 route (as you’ll see documented and explained), I couldn’t reach his BGP subnet of 10.10.0.0/16. I had expected to, since the same configuration with wg-quick let me reach it.

    Ollama is running
    / # route
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    default         10.244.2.117    0.0.0.0         UG    0      0        0 eth0
    10.10.0.0       *               255.255.0.0     U     0      0        0 wg0
    10.244.2.117    *               255.255.255.255 UH    0      0        0 eth0
    192.168.3.0     *               255.255.255.0   U     0      0        0 wg0

So I needed to run this explicit command, as I couldn’t reach his BGP subnet without it (wg-quick does quite a bit of magic here, setting up routes for the AllowedIPs entries on its own without any additional work, but whatever):

ip route add 10.10.0.0/16 dev wg0

That meant I was going to have to add the route to their BGP subnet manually inside Gluetun, using its iptables post-rules file, which I’ve documented below.
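To make the routing problem concrete, here is a minimal Python sketch of longest-prefix matching over the routing table shown above, with and without the 10.10.0.0/16 entry. It only models how the kernel picks a route. It does not model Gluetun's firewall, and the helper names (`pick_route`, `table`) are made up for illustration.

```python
import ipaddress

def table(entries):
    """Turn (CIDR, interface) pairs into (network, interface) pairs."""
    return [(ipaddress.ip_network(cidr), dev) for cidr, dev in entries]

def pick_route(routes, destination):
    """Return the matching (network, interface) entry with the longest prefix."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, dev) for net, dev in routes if addr in net]
    if not matches:
        return None
    return max(matches, key=lambda entry: entry[0].prefixlen)

# Routes taken from the table above, minus the manually added one.
before = table([
    ("0.0.0.0/0", "eth0"),
    ("10.244.2.117/32", "eth0"),
    ("192.168.3.0/24", "wg0"),
])
# Same table after `ip route add 10.10.0.0/16 dev wg0`.
after = before + table([("10.10.0.0/16", "wg0")])

OLLAMA = "10.10.0.10"  # upstream address used in nginx.conf
print(pick_route(before, OLLAMA)[1])  # eth0: falls through to the default route
print(pick_route(after, OLLAMA)[1])   # wg0: the /16 route now wins
```

Without the extra route, the only entry that matches 10.10.0.10 is the default route on eth0, so the traffic never enters the tunnel.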

Setting up the device plugin

Below is the YAML that I used to deploy the device plugin so that Gluetun would work with Talos. It makes the node’s /dev/net/tun device available to pods as the squat.ai/tun resource:

Device Plugin YAML

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: generic-device-plugin
      namespace: kube-system
      labels:
        app.kubernetes.io/name: generic-device-plugin
    spec:
      selector:
        matchLabels:
          app.kubernetes.io/name: generic-device-plugin
      template:
        metadata:
          labels:
            app.kubernetes.io/name: generic-device-plugin
        spec:
          priorityClassName: system-node-critical
          tolerations:
            - operator: "Exists"
              effect: "NoExecute"
            - operator: "Exists"
              effect: "NoSchedule"
          containers:
            - image: squat/generic-device-plugin
              args:
                - --device
                - |
                  name: tun
                  groups:
                    - count: 1000
                      paths:
                        - path: /dev/net/tun
              name: generic-device-plugin
              resources:
                requests:
                  cpu: 50m
                  memory: 10Mi
                limits:
                  cpu: 50m
                  memory: 20Mi
              ports:
                - containerPort: 8080
                  name: http
              securityContext:
                privileged: true
              volumeMounts:
                - name: device-plugin
                  mountPath: /var/lib/kubelet/device-plugins
                - name: dev
                  mountPath: /dev
          volumes:
            - name: device-plugin
              hostPath:
                path: /var/lib/kubelet/device-plugins
            - name: dev
              hostPath:
                path: /dev
      updateStrategy:
        type: RollingUpdate
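One way to check that the plugin registered on each node is to look for the squat.ai/tun resource under the node's status.allocatable. The sketch below is a hypothetical helper, not part of my original setup. It assumes it is given the JSON printed by `kubectl get nodes -o json`, and the node names in the sample are made up.

```python
import json

def tun_allocatable(node_list_json, resource="squat.ai/tun"):
    """Map each node name to the advertised count of the resource (0 if absent)."""
    nodes = json.loads(node_list_json)["items"]
    return {
        node["metadata"]["name"]: int(
            node["status"].get("allocatable", {}).get(resource, "0")
        )
        for node in nodes
    }

# Trimmed-down sample in the shape of `kubectl get nodes -o json` (hypothetical nodes).
sample = json.dumps({"items": [
    {"metadata": {"name": "talos-node-1"},
     "status": {"allocatable": {"cpu": "4", "squat.ai/tun": "1000"}}},
    {"metadata": {"name": "talos-node-2"},
     "status": {"allocatable": {"cpu": "4"}}},
]})

print(tun_allocatable(sample))
```

A node that reports 0 here would not be able to schedule the Gluetun pod, since that pod sets a limit of squat.ai/tun: "1".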

Creating the Gluetun application

Next I needed to create an ArgoCD application that deploys a single pod with two containers: Gluetun and Nginx. Since Gluetun is in the same pod as the Nginx container, and containers within the same pod share networking, once Gluetun can reach the Ollama endpoint across the Wireguard connection, the Nginx reverse proxy container can as well.

Gluetun ArgoCD Application

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: ollama-proxy
      namespace: argocd
    spec:
      project: default
      source:
        chart: app-template
        repoURL: https://bjw-s.github.io/helm-charts/
        targetRevision: 3.7.3
        helm:
          values: |
            controllers:
              main:
                annotations:
                  reloader.stakater.com/auto: "true"
                type: deployment
                pod:
                  securityContext:
                    fsGroup: 101
                    fsGroupChangePolicy: "OnRootMismatch"
                  dnsConfig:
                    options:
                      - name: ndots
                        value: "1"
                containers:
                  main:
                    nameOverride: nginx
                    image:
                      repository: nginx
                      tag: 1.25-alpine
                    env:
                      TZ: "America/Los_Angeles"
                  gluetun:
                    image:
                      repository: ghcr.io/qdm12/gluetun
                      tag: latest@sha256:183c74263a07f4c931979140ac99ff4fbc44dcb1ca5b055856ef580b0fafdf1c
                    envFrom:
                      - secretRef:
                          name: gluetun-secrets
                    securityContext:
                      capabilities:
                        add:
                          - NET_ADMIN
                    resources:
                      limits:
                        squat.ai/tun: "1"
                    probes:
                      liveness:
                        enabled: false
                        custom: true
                        spec:
                          initialDelaySeconds: 20 # Time to wait before starting the probe after startup probe succeeds
                          periodSeconds: 30 # How often to perform the probe
                          timeoutSeconds: 30 # Number of seconds after which the probe times out
                          failureThreshold: 3 # Number of times to try the probe before considering the container not ready
                          httpGet:
                            path: /
                            port: 9999
            service:
              main:
                controller: main
                enabled: true
                ports:
                  http:
                    port: 80
                  gluetun:
                    enabled: true
                    protocol: HTTP
                    port: 8000
            persistence:
              config:
                enabled: true
                type: configMap
                name: ollama-proxy-config
                advancedMounts:
                  main:
                    main:
                      - path: /etc/nginx
              iptables:
                enabled: true
                type: configMap
                name: iptable-rules
                advancedMounts:
                  main:
                    gluetun:
                      - path: /iptables
      destination:
        server: "https://kubernetes.default.svc"
        namespace: ollama
      syncPolicy:
        syncOptions:
          - CreateNamespace=true
        automated:
          prune: true
          selfHeal: true
        managedNamespaceMetadata:
          labels:
            pod-security.kubernetes.io/enforce: privileged

Creating the Secrets required

Below is an example of my Kubernetes secret named gluetun-secrets that contains the environment variables that are used within the Gluetun container.

In my instance, Gluetun wasn’t happy with Wireguard unless I used these variables:

- WIREGUARD_PRIVATE_KEY

- WIREGUARD_PUBLIC_KEY

- WIREGUARD_PRESHARED_KEY

Gluetun Secrets

    apiVersion: v1
    kind: Secret
    metadata:
      name: gluetun-secrets
      namespace: ollama
    type: Opaque
    stringData:
      VPN_SERVICE_PROVIDER: custom
      VPN_TYPE: wireguard
      WIREGUARD_ADDRESSES: 192.168.3.5/24
      WIREGUARD_PRIVATE_KEY: "A...="
      WIREGUARD_PUBLIC_KEY: "j...="
      WIREGUARD_ENDPOINT_IP: "15.45.124.28"
      WIREGUARD_ENDPOINT_PORT: "51820"
      WIREGUARD_ALLOWED_IPS: "0.0.0.0/0,10.10.0.0/16"
      WIREGUARD_PRESHARED_KEY: "V...="
      DOT: "off"
      VPN_INTERFACE: wg0
      SHADOWSOCKS: "on"
      TZ: America/Los_Angeles
      DNS_KEEP_NAMESERVER: "on"
      WIREGUARD_MTU: "1420"
      FIREWALL_INPUT_PORTS: 80,8388,9999 # 80: WebUI, 8388 Socks Proxy, 9999 Kube Probes
      FIREWALL_OUTBOUND_SUBNETS: 10.244.0.0/16 # Allow access to k8s subnets
      FIREWALL_DEBUG: "true"
      HEALTH_SUCCESS_WAIT_DURATION: 10s
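Since a typo in any of these values only shows up once Gluetun starts, a quick pre-flight check can save a redeploy. This is a rough Python sketch under my own assumptions: the `REQUIRED` list covers only the three keys this setup needed (not Gluetun's official requirements), and `check_gluetun_env` is a hypothetical helper that takes the stringData mapping as a plain dict.

```python
import ipaddress

# Only the keys this post found necessary; not an official Gluetun list.
REQUIRED = (
    "WIREGUARD_PRIVATE_KEY",
    "WIREGUARD_PUBLIC_KEY",
    "WIREGUARD_PRESHARED_KEY",
)

def check_gluetun_env(env):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    for key in REQUIRED:
        if not env.get(key):
            problems.append(f"missing {key}")
    # Every allowed-IPs entry should be a proper network (no host bits set).
    for cidr in env.get("WIREGUARD_ALLOWED_IPS", "").split(","):
        try:
            ipaddress.ip_network(cidr.strip(), strict=True)
        except ValueError:
            problems.append(f"bad allowed IP range: {cidr!r}")
    # The tunnel address is an interface address, so host bits are expected.
    try:
        ipaddress.ip_interface(env.get("WIREGUARD_ADDRESSES", ""))
    except ValueError:
        problems.append("bad WIREGUARD_ADDRESSES")
    return problems

env = {
    "WIREGUARD_ADDRESSES": "192.168.3.5/24",
    "WIREGUARD_PRIVATE_KEY": "A...=",
    "WIREGUARD_PUBLIC_KEY": "j...=",
    "WIREGUARD_PRESHARED_KEY": "V...=",
    "WIREGUARD_ALLOWED_IPS": "0.0.0.0/0,10.10.0.0/16",
}
print(check_gluetun_env(env))  # []
```

It only checks the shape of the values, so it cannot tell you whether the keys themselves are correct.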

iptables tweaks so that Gluetun would play nicely with BGP

Below are the two Kubernetes ConfigMaps that I created: ollama-proxy-config, which contains the nginx.conf that I used for my reverse proxy, and iptable-rules, which is mounted to the Gluetun container. The latter represents a file inside the Gluetun container, with each line being a new iptables command that will be run after the VPN connection is established.

Gluetun `iptables` configmap

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ollama-proxy-config
      namespace: ollama
    data:
      nginx.conf: |
        events {
        }
        http {
          server {
            listen 80;
            server_name _;
            location / {
              proxy_pass http://10.10.0.10:11434;
              proxy_set_header Host $host;
              proxy_set_header X-Real-IP $remote_addr;
              proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
              proxy_set_header X-Forwarded-Proto $scheme;
              # WebSocket support
              proxy_http_version 1.1;
              proxy_set_header Upgrade $http_upgrade;
              proxy_set_header Connection "upgrade";
              # Increase timeouts for long-running Ollama requests
              proxy_read_timeout 300s;
              proxy_connect_timeout 75s;
            }
          }
        }
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: iptable-rules
      namespace: ollama
    data:
      post-rules.txt: |
        ip route add 10.10.0.0/16 dev wg0

Conclusion

After creating these resources and tweaking them to fit your needs, you should have a working Wireguard connection in whatever containers you have running alongside Gluetun.
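A simple smoke test is to request the root path through the proxy and look for the "Ollama is running" banner that showed up in my terminal earlier. The sketch below is hypothetical: the Service DNS name is an assumption based on the application name and namespace used in this post, so adjust it to whatever your release actually creates.

```python
import urllib.request

# Assumption: in-cluster DNS name of the proxy Service; yours may differ.
PROXY_URL = "http://ollama-proxy.ollama.svc.cluster.local"

def looks_like_ollama(body):
    """True if the response body is the banner Ollama serves at its root path."""
    return body.strip() == "Ollama is running"

def check_proxy(base_url=PROXY_URL, timeout=10):
    """Fetch the root path through the Nginx proxy and check for the banner."""
    with urllib.request.urlopen(base_url + "/", timeout=timeout) as resp:
        return resp.status == 200 and looks_like_ollama(resp.read().decode())
```

Calling `check_proxy()` from another pod in the cluster should return True once the tunnel, the extra route, and Nginx are all working. A timeout usually points at the route or the firewall rather than at Nginx.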